The bad – using AI to overrule human decisionsīut what happens when users aren’t involved and don’t know how an AI ‘second opinion’ came about? This ensures better acceptance and trust.” It’s also important to note that the end users of the decision-support system –medical doctors– have been actively involved in its design. Oncologists are still responsible for the diagnosis, Gilhuijs remarks, but “they use the computer as a tool, just as they might use a statoscope. “We want to improve it even further to aid radiologists, by enabling them to focus on cases that require greater human judgement.” “We have now shown that the AI-powered system can reduce the number of false positives down to 64% without missing any cancer,” says Gilhuijs, in whose lab the algorithm was trained on a database of MRIs from 4,873 women in The Netherlands who had taken part in Van Gils’s study. “If the number of biopsies on benign lesions can be reduced, that would alleviate the burden on women and clinical resources,” Gilhuijs thought. Adding MRI to standard mammography detected approximately 17 additional breast cancers the downside being, 87.3% of the total number of lesions referred to additional MRI or biopsy were false-positive for cancer. The idea came after a study, led by his colleague and cancer epidemiologist Carla van Gils, suggested adopting Magnetic Resolution Imaging (MRI) in screening of women with extremely dense breasts could help reduce breast-cancer mortality in this group. Because how can we ever trust systems whose decisions we don't understand? How will we account for wrongdoings? And can they be prevented? Since the 1980s, Utrecht University has been building a diverse community of experts to ‘unbox’ artificial intelligence to allow the promises of this technological revolution to emerge. 
“People want someone to blame, but we haven’t reached a universal agreement yet.”Īs a society, discussing what these artificial intelligent systems should or should not do is essential before they start crowding our roads – and virtually every other aspect of our lives. “So, what if an autonomous car injures or kills someone? Who should be held responsible: Is it the distracted person sitting behind the self-driving wheel? The authorities that allowed the car on the road? Or the manufacturer who set up the preferences to save the passenger at all costs?” raises Nyholm, who penned an influential paper about the pressing ethics of accident-algorithms for autonomous vehicles. Many algorithmic systems are a ‘black box’: information goes in, and decisions come out, but we have little idea how they arrived at those decisions. "Their team of experts brings together traditional and digital engagement solutions to help customers transform their employee and customer experiences-at scale!", Verma continued.Knowing how AI systems reach decisions isn’t always clear or straightforward.

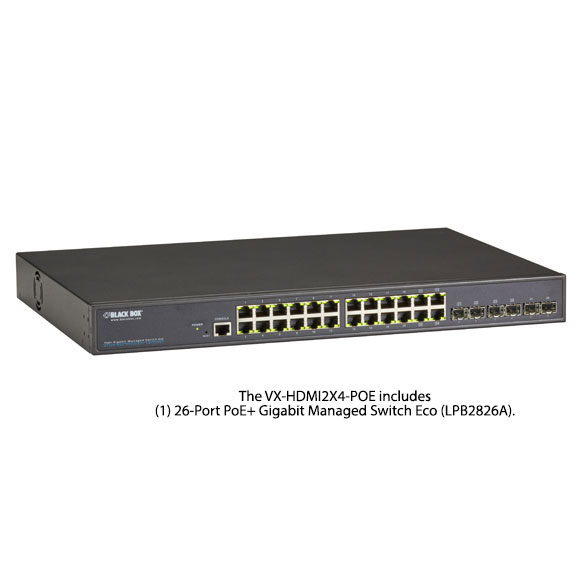

"We are thrilled to welcome GSN Australia to the Black Box family," said Sanjeev Verma, Executive Director of Black Box Limited and President and CEO of Black Box Corporation. As a result of this acquisition, Black Box will be able to offer its customers an even wider range of Cloud Contact Center and Digital Experience solutions that further strengthens its position in the Trans-Tasman markets and leverage the skills and expertise of our global customers. The acquisition of GSN Australia aligns with Black Box's growth strategy and expands its portfolio of products and services. PRNewswire Mumbai (Maharashtra)/ Dallas (Texas) / Pittsburgh, June 19: Black Box Limited (formerly AGC Networks Limited) (BSE: BBOX) (NSE: BBOX), a trusted IT solutions provider, announced that it has completed the acquisition of GSN Australia through its indirect wholly-owned subsidiary - Black Box Technologies Australia Pty Ltd.
